My homelab is something I get very little time to work on, but I've been wanting to move on from Portainer and multiple instances running Docker. I'd also been wanting to play with Kubernetes and set up my own cluster. In these posts I plan to install Kubernetes (K3s) on my new Rock Pis and migrate my homelab onto the new cluster.

To start...

I decided to boot up a VM running Ubuntu 20.04 on my Proxmox instance. This will be the master node for my K3s cluster until I decide to move it to another machine. Then I will install K3s on my two Rock Pis: one will be a plain agent node and the other an agent node with NVMe storage.

As part of this, I created a GitHub repo with some of my configuration examples. Feel free to use them to get started yourself: homelab-k3s repo


Setting up the Nodes

I created a virtual machine on my Proxmox server from a template I already had for Ubuntu 20.04. I booted the image with cloud-init and set an SSH key so that I could access the instance:

ssh master-node

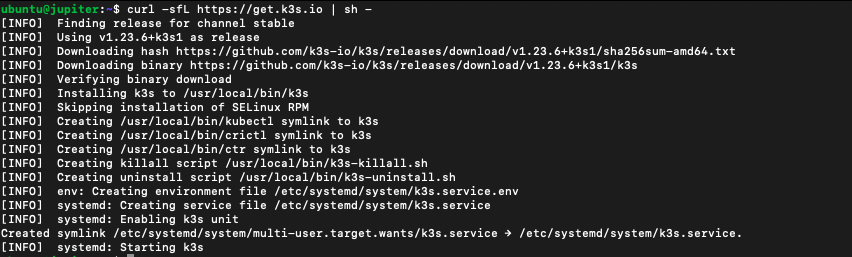

From here I installed K3s via the installer script:

curl -sfL https://get.k3s.io | sh -

I then grabbed the node token from the server so I could use it on my two Rock Pi 4s:

sudo cat /var/lib/rancher/k3s/server/node-token

Then on my nodes I ran:

curl -sfL https://get.k3s.io | K3S_URL=https://{server.hostname}:6443 K3S_TOKEN={token} sh -
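If you'd rather not copy the token around by hand, the two steps above can be scripted from a workstation. This is a minimal sketch, assuming passwordless SSH to every host and passwordless sudo on the server; join_agents is a hypothetical helper and the hostnames in the usage line are made up.

```shell
#!/usr/bin/env bash
# Hypothetical helper: join agents to a K3s server over SSH.
# Assumes passwordless SSH to all hosts and passwordless sudo on the server.
join_agents() {
  local master="$1"; shift
  local token agent
  # Read the join token the server wrote at install time.
  token="$(ssh "$master" sudo cat /var/lib/rancher/k3s/server/node-token)" || return 1
  for agent in "$@"; do
    echo "Joining ${agent} to ${master}"
    # Same installer as the server; setting K3S_URL makes it install as an agent.
    ssh "$agent" "curl -sfL https://get.k3s.io | K3S_URL=https://${master}:6443 K3S_TOKEN=${token} sh -"
  done
}

# Usage (hypothetical hostnames):
# join_agents master-node rockpi-1 rockpi-2
```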

Labelling nodes

I used kubectl to label my nodes as workers:

kubectl label node {name} node-role.kubernetes.io/worker=worker

Setting up dashboard

On my Mac I've installed kubectl with brew and I grabbed a copy of the k3s config file from my master node:

sudo cat /etc/rancher/k3s/k3s.yaml

I edited my local kube config:

nano $HOME/.kube/config

I pasted the contents in and replaced the server address (https://127.0.0.1:6443 in the file K3s generates) with my master node's IP address (in my case, a local DNS entry).
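Rather than editing by hand, the copy-and-replace can be done in one pipeline. A minimal sketch: k3s_kubeconfig_for is a hypothetical helper that rewrites the loopback API address K3s puts in k3s.yaml. The usage lines assume passwordless sudo on master-node and write to a separate file instead of overwriting an existing ~/.kube/config.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point a K3s-generated kubeconfig at a reachable host.
# Reads k3s.yaml on stdin and writes the rewritten file to stdout.
k3s_kubeconfig_for() {
  local host="$1"
  # K3s writes server: https://127.0.0.1:6443, which only works on the server itself.
  sed "s#https://127\.0\.0\.1:6443#https://${host}:6443#"
}

# Usage (assumes passwordless sudo on master-node):
# mkdir -p "$HOME/.kube"
# ssh master-node sudo cat /etc/rancher/k3s/k3s.yaml | k3s_kubeconfig_for master-node > "$HOME/.kube/k3s.yaml"
# export KUBECONFIG="$HOME/.kube/k3s.yaml"
# kubectl get nodes
```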

Next I created a folder called k3s to store my YAML files, and inside it a new folder called dashboard.

I added two files to the dashboard folder:

dashboard/dashboard.admin-user.yml:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
```

and:

dashboard/dashboard.admin-user-role.yml:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard
```

I was then able to run the following against my K3s cluster to create the dashboard admin user:

kubectl create -f dashboard.admin-user.yml -f dashboard.admin-user-role.yml

Next I needed to grab the access token for the user created above:

kubectl -n kubernetes-dashboard describe secret admin-user-token | grep '^token'
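One caveat: from Kubernetes 1.24, a token Secret is no longer created automatically for a ServiceAccount, so the describe secret command can come back empty on newer K3s releases. There, kubectl create token admin-user requests a short-lived token instead. A sketch that tries that first and falls back to the Secret (dashboard_token is a hypothetical helper name):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a login token for the admin-user ServiceAccount.
dashboard_token() {
  local ns="${1:-kubernetes-dashboard}"
  # Kubernetes 1.24+: request a short-lived token via the TokenRequest API.
  if ! kubectl -n "$ns" create token admin-user 2>/dev/null; then
    # Older clusters: read the token from the auto-generated Secret.
    kubectl -n "$ns" describe secret admin-user-token | awk '/^token/ {print $2}'
  fi
}

# Usage:
# dashboard_token kubernetes-dashboard
```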

This returned a token to use at login. Next, I started a proxy to the dashboard with:

kubectl proxy

It starts up and runs on localhost:8001!

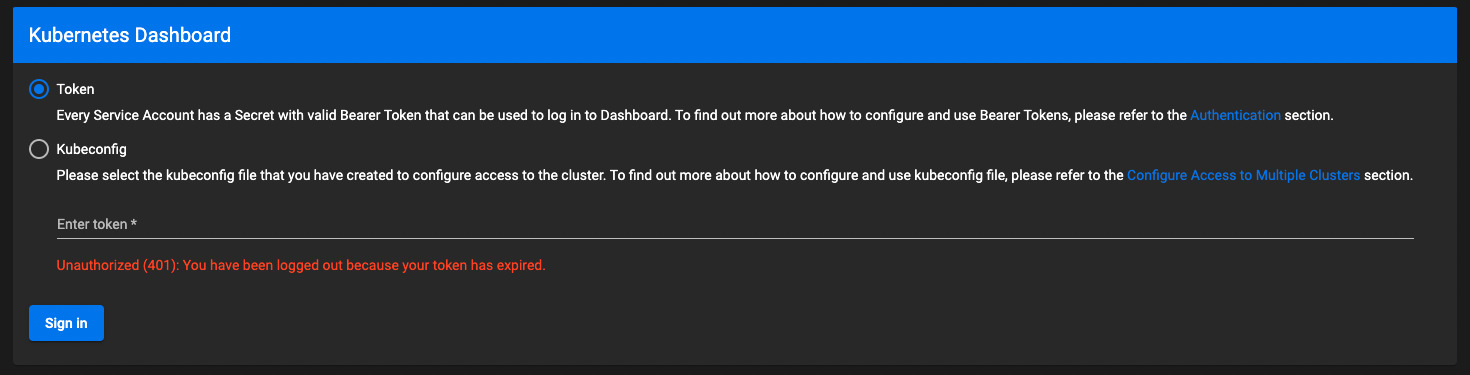

I went to the following url and pasted my token in: http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/


Moving on to Helm

After playing around with the dashboard, I decided it was time to move everything over to Helm charts and get the Rancher dashboard and Longhorn configured.

Preparing to use Helm

Since I'm on a Mac, I installed Helm with Homebrew for simplicity:

brew install helm

With Helm installed, I added a few chart repositories; skip any you don't need:

helm repo list

```
NAME                    URL
longhorn                https://charts.longhorn.io
jetstack                https://charts.jetstack.io
metallb                 https://metallb.github.io/metallb
ingress-nginx           https://kubernetes.github.io/ingress-nginx
kubernetes-dashboard    https://kubernetes.github.io/dashboard/
rancher-stable          https://releases.rancher.com/server-charts/stable
```

Add whichever you need with: helm repo add {name} {url}
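Adding the repos one at a time gets tedious; a small loop over the same name/URL pairs does it in one go. A sketch, where add_helm_repos is a hypothetical helper; trim the list to the charts you need.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add the chart repositories listed above, then refresh them.
add_helm_repos() {
  local name url
  while read -r name url; do
    [ -z "$name" ] && continue   # skip blank lines
    helm repo add "$name" "$url"
  done <<'EOF'
longhorn https://charts.longhorn.io
jetstack https://charts.jetstack.io
metallb https://metallb.github.io/metallb
ingress-nginx https://kubernetes.github.io/ingress-nginx
kubernetes-dashboard https://kubernetes.github.io/dashboard/
rancher-stable https://releases.rancher.com/server-charts/stable
EOF
  # Fetch the latest chart indexes from every configured repo.
  helm repo update
}

# Usage:
# add_helm_repos
```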

With those repos configured, I tore down the services and nodes already on the cluster and prepared to set everything up from scratch.

Making changes to the cluster

First, I decided to use MetalLB and NGINX ingress for networking.

MetalLB is a load balancer for bare-metal clusters: it takes a range of IP addresses from your network and assigns them to services of type LoadBalancer.

NGINX ingress replaces the Traefik ingress that comes with K3s. There's nothing wrong with Traefik; I'm just a big fan of NGINX and have a little more experience with how it works.

ssh master-node

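To save time, I uninstalled everything and reinstalled K3s on the master with Traefik and the built-in ServiceLB load balancer disabled, since NGINX ingress and MetalLB take over those roles: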

curl -fL https://get.k3s.io | INSTALL_K3S_EXEC="--node-ip x.x.20.1 --disable traefik --disable servicelb" sh -

I then repeated the agent install on the worker nodes, using the new server's token as in the previous steps.
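The same server options can also live in a K3s config file, /etc/rancher/k3s/config.yaml, where each key is a CLI flag without its leading dashes and repeatable flags become lists. K3s reads it on startup, so it needs to be in place before the installer runs. A sketch, where k3s_server_config is a hypothetical helper and the node IP is the same placeholder as above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a K3s server config equivalent to
# --node-ip <ip> --disable traefik --disable servicelb
k3s_server_config() {
  local node_ip="$1"
  cat <<EOF
node-ip: ${node_ip}
disable:
  - traefik
  - servicelb
EOF
}

# Usage (assumes passwordless sudo on master-node; x.x.20.1 is a placeholder):
# k3s_server_config x.x.20.1 | ssh master-node 'sudo mkdir -p /etc/rancher/k3s && sudo tee /etc/rancher/k3s/config.yaml'
# ssh master-node 'curl -sfL https://get.k3s.io | sh -'
```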

Configuring MetalLB for load balancer

Step 1 is to create a values file with the address pool for MetalLB. I've created folders to separate the configs I'm using (see the GitHub project for copies of them).

loadbalancer/subnet.metallb.yml:

```yaml
configInline:
  address-pools:
    - name: default
      protocol: layer2
      addresses:
        - x.x.20.200-x.x.20.250
```

Replace all 'x' with the subnet range you are using.

With that in place, I ran the Helm install command:

helm install metallb metallb/metallb -f loadbalancer/subnet.metallb.yml -n metallb-system --create-namespace

This created the MetalLB resources in the metallb-system namespace. As there is no ingress controller yet, there is no LoadBalancer service for MetalLB to assign an IP to, so we can't see an IP being set yet.
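Worth knowing: MetalLB 0.13 and later no longer read configInline; the address pool is configured with IPAddressPool and L2Advertisement custom resources instead, applied after the chart is installed. If you're on a newer chart, this is a sketch of the equivalent layer 2 config (metallb_pool_manifest is a hypothetical helper; the range is the same placeholder as above).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print MetalLB CRD config equivalent to the configInline pool.
metallb_pool_manifest() {
  local range="$1"
  cat <<EOF
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: default
  namespace: metallb-system
spec:
  addresses:
    - ${range}
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: default
  namespace: metallb-system
spec:
  ipAddressPools:
    - default
EOF
}

# Usage (the deployment name is assumed from a release called metallb):
# kubectl -n metallb-system rollout status deployment/metallb-controller
# metallb_pool_manifest x.x.20.200-x.x.20.250 | kubectl apply -f -
```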

Installing nginx ingress

With the load balancer configured, it's time to install an ingress controller for networking:

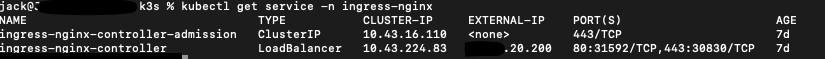

helm upgrade --install ingress-nginx ingress-nginx/ingress-nginx --namespace ingress-nginx --create-namespace

Once it's installed, running kubectl get service -n ingress-nginx should show a controller service of type LoadBalancer with an external IP assigned by MetalLB, plus a ClusterIP service for the admission webhook.
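MetalLB can take a moment to hand out an address. A minimal polling sketch: wait_for_lb_ip is a hypothetical helper, and ingress-nginx-controller is the service name the chart creates for a release called ingress-nginx.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a LoadBalancer service until MetalLB assigns an IP.
wait_for_lb_ip() {
  local ns="${1:-ingress-nginx}" svc="${2:-ingress-nginx-controller}" tries="${3:-30}"
  local i ip
  for ((i = 0; i < tries; i++)); do
    ip="$(kubectl -n "$ns" get service "$svc" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}')"
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    sleep 2
  done
  echo "no external IP assigned to ${ns}/${svc}" >&2
  return 1
}

# Usage:
# wait_for_lb_ip   # prints the external IP from the MetalLB pool
```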

Installing cert-manager (optional)

Since I want to use HTTPS/TLS wherever I can, I decided to install cert-manager, along with the cmctl tool on my Mac. The cmctl install steps use Go to detect the OS and architecture, so I installed Go first:

brew install go

Then I followed the steps from cert-manager.io:

```shell
OS=$(go env GOOS); ARCH=$(go env GOARCH)
curl -sSL -o cmctl.tar.gz https://github.com/cert-manager/cert-manager/releases/download/v1.7.2/cmctl-$OS-$ARCH.tar.gz
tar xzf cmctl.tar.gz
sudo mv cmctl /usr/local/bin
```

I might use the tool at some point, but for now I'm going to install cert-manager via Helm and create a CA certificate for self-signing the NGINX ingress certificates:

helm install cert-manager jetstack/cert-manager --namespace cert-manager --create-namespace --version v1.8.0 --set installCRDs=true

Make sure to install the latest stable version; at the time of writing, v1.8.0 is the latest.
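Before creating issuers, it can help to wait until the cert-manager webhook is up, since applying cert-manager resources can fail while it is still starting. cmctl check api --wait does this; below is a sketch that falls back to kubectl rollout status when cmctl isn't installed. wait_for_cert_manager is a hypothetical helper, and cert-manager-webhook is assumed to be the deployment name for a release called cert-manager.

```shell
#!/usr/bin/env bash
# Hypothetical helper: block until cert-manager can accept resources.
wait_for_cert_manager() {
  if command -v cmctl >/dev/null 2>&1; then
    # cmctl checks that the cert-manager API (including the webhook) is usable.
    cmctl check api --wait=2m
  else
    # Fallback: wait for the webhook Deployment to finish rolling out.
    kubectl -n cert-manager rollout status deployment/cert-manager-webhook --timeout=120s
  fi
}

# Usage:
# wait_for_cert_manager
```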

ca.cluster.yml:

```yaml
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: {domain}-local-ca
  namespace: cert-manager
spec:
  isCA: true
  commonName: {domain}.tld
  secretName: {domain}-tld
  privateKey:
    algorithm: ECDSA
    size: 256
  issuerRef:
    name: {domain}-self-signed-issuer
    kind: ClusterIssuer
    group: cert-manager.io
---
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: {domain}-self-signed-issuer
spec:
  selfSigned: {}
---
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: {domain}-cluster-issuer
spec:
  ca:
    secretName: {domain}-tld
```

Replace {domain} with your domain name. I'm using my iamjack.local domain, so {domain} = iamjack and tld = local.
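Because the manifest is a template, the placeholders need substituting before it's applied. A sketch using sed: render_ca_manifest is a hypothetical helper that assumes the placeholders appear exactly as written in ca.cluster.yml. The usage lines then apply the result and wait for the CA certificate to become Ready.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fill in the {domain}/tld placeholders in ca.cluster.yml.
# Reads the template on stdin and writes the rendered manifest to stdout.
render_ca_manifest() {
  local domain="$1" tld="$2"
  # Order matters: handle {domain}.tld and {domain}-tld before bare {domain}.
  sed -e "s/{domain}\.tld/${domain}.${tld}/g" \
      -e "s/{domain}-tld/${domain}-${tld}/g" \
      -e "s/{domain}/${domain}/g"
}

# Usage (iamjack/local as in the example above):
# render_ca_manifest iamjack local < ca.cluster.yml | kubectl apply -f -
# kubectl -n cert-manager wait --for=condition=Ready certificate/iamjack-local-ca --timeout=60s
```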

To verify the ClusterIssuers are available:

kubectl describe clusterissuer

You can also check if the certificate was generated:

kubectl get certificate -n cert-manager

Mine looks like:

```
NAME               READY   SECRET          AGE
iamjack-local-ca   True    iamjack-local   23m
```

Setting up Dashboard on nginx ingress

First, I created a small values file for a basic setup:

dashboard/dashboard.values.yml:

```yaml
ingress:
  hosts:
    - k8.{domain}
  enabled: true
  annotations:
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
```

Then running the following will create the required items:

helm install kubernetes-dashboard kubernetes-dashboard/kubernetes-dashboard --namespace {namespace} -f dashboard/dashboard.values.yml --create-namespace

I needed to create a few additional resources to get everything working.

First, an admin user and role binding:

dashboard/dashboard.user.admin.yml:

```yaml
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: {namespace}
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: {namespace}
```

Then I added an ingress configuration to allow access to the dashboard via the ingress controller.

dashboard/dashboard.ingress.yml:

```yaml
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: {domain}-cluster-issuer
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
  name: ingress-dashboard
spec:
  rules:
    - host: k8.{domain}
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: kubernetes-dashboard
                port:
                  number: 443
  tls:
    - hosts:
        - k8.{domain}
      secretName: k8-dash-cert
  ingressClassName: nginx
```

Then apply the configuration:

kubectl apply -f dashboard/dashboard.user.admin.yml -f dashboard/dashboard.ingress.yml -n {namespace}
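If k8.{domain} doesn't resolve yet, the ingress can still be checked by pinning the hostname to the MetalLB address with curl's --resolve option. A sketch: check_ingress_host is a hypothetical helper, and -k skips certificate verification because the certificate comes from the self-signed CA.

```shell
#!/usr/bin/env bash
# Hypothetical helper: request https://<host>/ via a specific IP, bypassing DNS.
# Prints the HTTP status code returned through the ingress.
check_ingress_host() {
  local host="$1" ip="$2"
  # -k: the certificate is issued by our self-signed CA, which curl doesn't trust.
  curl -sk -o /dev/null -w '%{http_code}\n' \
    --resolve "${host}:443:${ip}" "https://${host}/"
}

# Usage (placeholders as above):
# check_ingress_host k8.{domain} x.x.20.200
```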

Now the dashboard should be running as a service:

kubectl -n {namespace} get svc

```
NAME                   TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
kubernetes-dashboard   ClusterIP   10.43.205.28   <none>        443/TCP   7d4h
```

Great, that looks good. Let's check the ingress to see if it's in place:

kubectl -n {namespace} get ingress

```
NAME                CLASS   HOSTS         ADDRESS       PORTS     AGE
ingress-dashboard   nginx   k8.{domain}   x.x.20.200    80, 443   7d3h
```

Looks good. Time to grab the token and log in:

kubectl -n {namespace} describe secret admin-user-token | grep '^token'

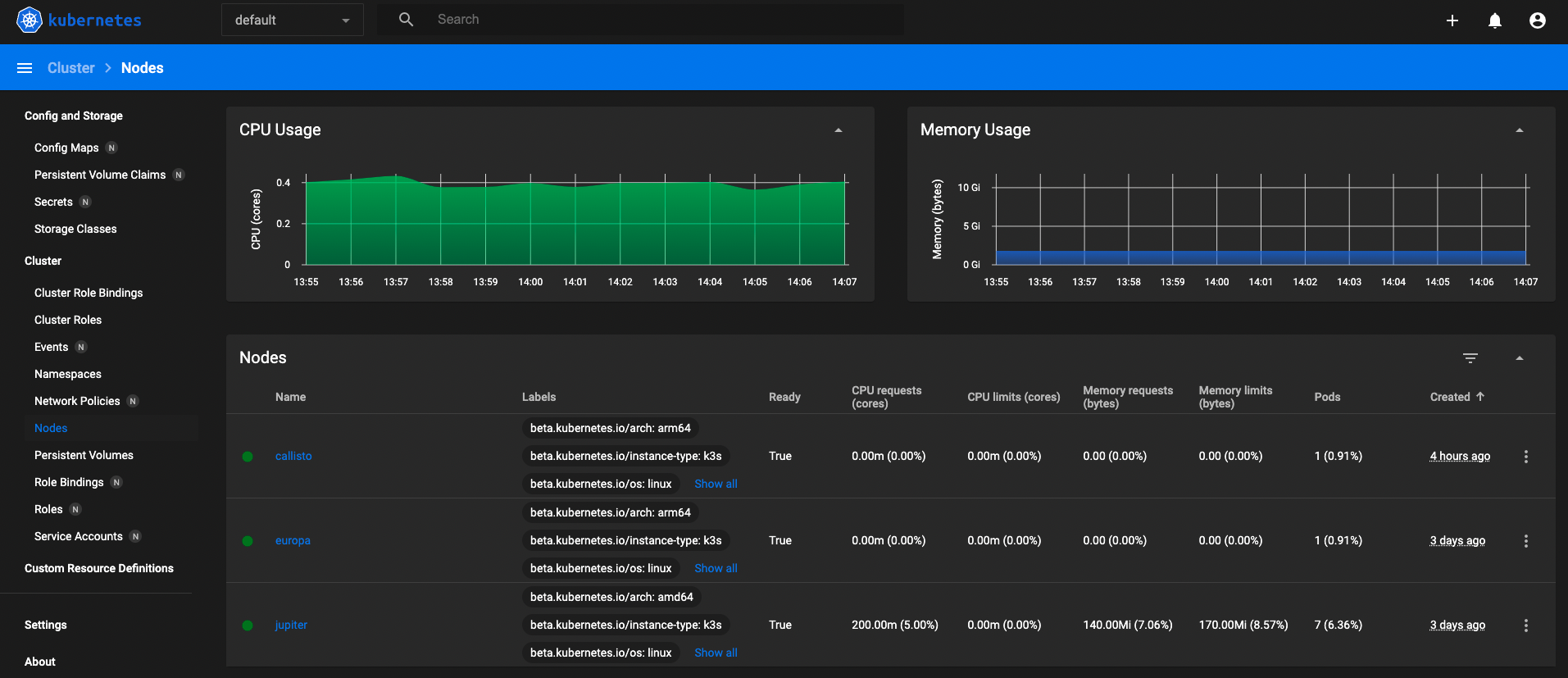

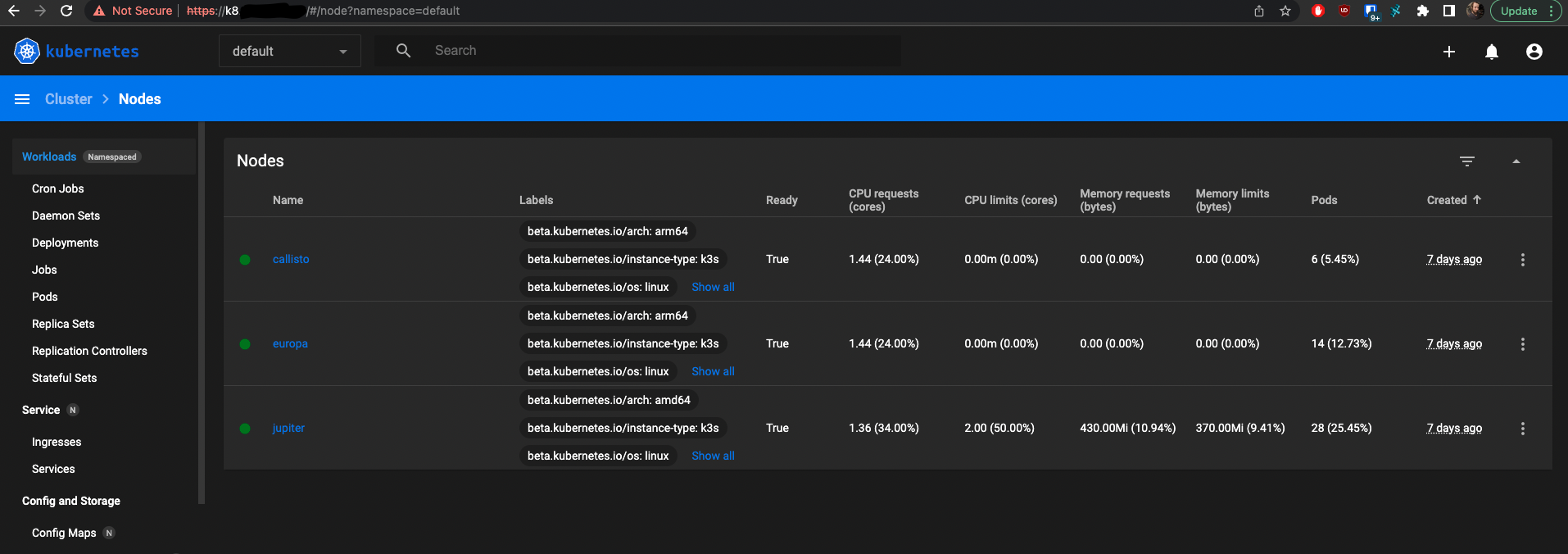

With the token in hand, I logged in at my dashboard URL and could get to the nodes:

Conclusion

Now I've got K3s set up on my instances along with cert-manager, NGINX ingress and the Kubernetes dashboard. My next step is to get the Rancher dashboard, Longhorn and my first service set up so that I can start migrating away from my docker-compose setup.

In the next part I will set up Rancher, Longhorn, Vault and Vaultwarden.